Meta Ray-Ban Display vs Envision — What Really Works for Low Vision Users

Smart glasses aren’t just flashy gadgets. For people with vision loss, they can mean independence, confidence, and a new way of engaging with the world.

Although I have limited vision, it’s important to me to use and preserve the sight I still have. I’ve tried devices before that completely blocked my natural view and replaced it with a “camera feed,” and I found that approach uncomfortable and disorienting. I don’t want to give up what little vision I still have just to gain new features.

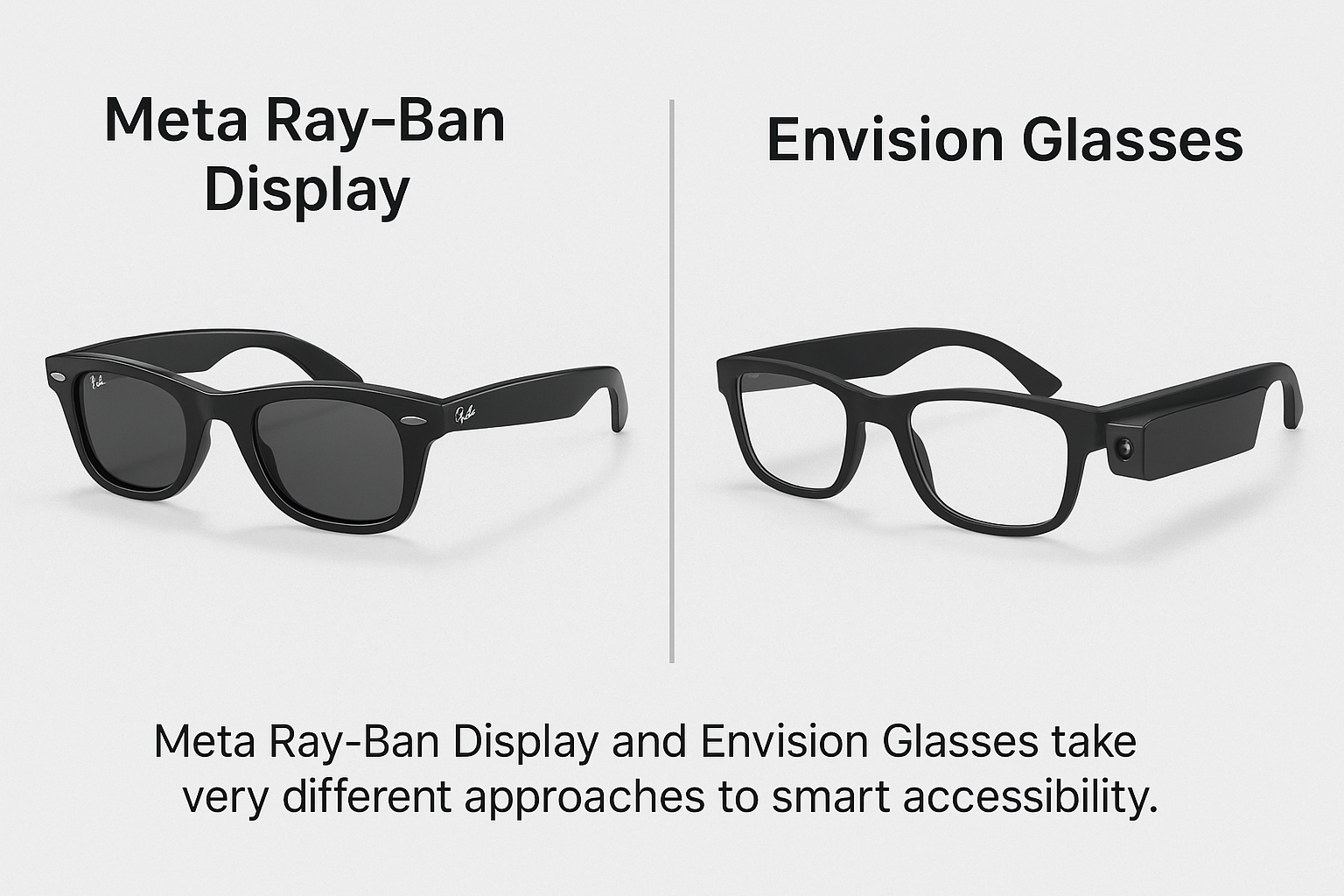

That’s why comparing Meta’s new Ray-Ban Display glasses with the Envision glasses is so interesting — they take very different approaches to accessibility, and one might fit better depending on your priorities.

🔍 Different Design Philosophies

One of the biggest differences is in the purpose behind the two devices.

- Meta Ray-Ban Display glasses were created for a mass-market audience — stylish frames paired with AI and smart features. Accessibility wasn’t the original goal, but many of the functions (object recognition, AI descriptions, hands-free controls) are surprisingly useful for people with vision loss.

- Envision Glasses, on the other hand, were designed from the ground up for blind and low-vision users. Every feature, from text reading to scene description, is focused on enhancing accessibility, even if it comes at a higher price point.

👉 In short: Meta sells fashion with function, while Envision sells accessibility first.

Meta’s focus is style and mass market, while Envision is engineered for accessibility from the ground up.

For background, you can revisit my earlier article on the Ray-Ban Meta Smart Glasses to see how I broke down their accessibility features.

👀 Seeing Through vs Hearing the World

This is where the difference really hits home.

- Meta Ray-Ban Display: These new glasses add a heads-up display (HUD) in one lens, showing things like text, navigation prompts, or AI feedback. Importantly, the display is off to the side, so it doesn’t completely block your view. That means you can still use your remaining vision while glancing at the display. Engadget’s hands-on calls it “discreet and intuitive.”

- Envision Glasses: Instead of putting anything in front of your eyes, Envision uses an open, see-through frame with a camera mounted near the temple. The camera captures what’s in front of you and narrates it through built-in speakers or earbuds. Your vision isn’t covered at all — you continue to see what you naturally can, while hearing descriptions of text, objects, or people.

For me, this difference matters. I want tools that add to my vision, not take it away.

Meta Display adds prompts without fully blocking natural vision.

💬 Real-Life Scenarios Compared

Rather than just listing specs, let’s talk about how these devices work in daily life situations.

🏞️ Navigation Outdoors

- Meta: Connects with GPS for turn-by-turn audio, plus the HUD can show directional prompts. But glare or outdoor lighting may affect the mini-display.

- Envision: Narrates signs, describes intersections, and can identify landmarks. It’s audio-first, which may help or hinder depending on how much noise is around you.

🍴 Reading Menus or Signs

- Meta: You can snap a picture or ask the AI assistant to read text aloud.

- Envision: Built specifically for this. It reads print, handwritten menus, and even food packaging labels aloud.

If your main challenge is reading text quickly, Envision is far more reliable.

Envision Glasses read printed menus and signs aloud, making everyday tasks more accessible.

👥 Recognizing Faces and People

- Meta: Face recognition isn’t its strong suit yet; it’s more about general AI descriptions.

- Envision: Has dedicated face recognition, designed to help you know who’s nearby.

🏡 At Home

- Meta: Great for quick “what’s in front of me” AI prompts. But it may struggle with small labels or cluttered shelves.

- Envision: Stronger at reading labels, identifying colors, or helping with organization.

💵 Price and Practicality

Here’s where the gap really widens.

- At launch, the Meta Ray-Ban Display glasses retailed for around $799. The earlier Ray-Ban Meta smart glasses, which don't have a built-in display, can often be found for significantly less on Amazon, sometimes under $300 depending on the model and features.

- Check current pricing for the Ray-Ban Meta Smart Glasses on Amazon.

- Envision Glasses cost closer to $3,500 or more and are usually purchased through assistive tech providers or directly from Envision's website. Some users may qualify for grants or assistive technology funding, depending on location.

If cost is your biggest concern, Meta wins. But if accessibility is the top priority, Envision’s specialized features may justify the investment.

Meta focuses on general AI assistance, while Envision offers specialized accessibility features.

✅ Pros and Cons for Low Vision Users

Meta Ray-Ban Display

✔ Stylish, discreet, and mainstream

✔ Affordable compared to assistive tech devices

✔ Useful features like audio navigation, AI descriptions, and a heads-up display

✘ Not designed specifically for accessibility

✘ Display may be distracting or cause eye strain

✘ Features depend heavily on AI accuracy

Envision Glasses

✔ Purpose-built for blind and low-vision users

✔ Excellent for reading, object recognition, and scene description

✔ Open design preserves natural vision

✘ Very expensive

✘ Performance can vary with lighting and connectivity

✘ Niche product with a limited support network and ecosystem

🔮 Other Players to Watch

While Meta and Envision are the hot names right now, they’re not alone:

- eSight: Electronic glasses with magnification, but they fully cover your eyes (not great if you want see-through).

- OrCam MyEye: A small camera device that clips onto regular glasses for text reading and object recognition.

- Apple's rumored AI glasses: Apple is reportedly shifting resources from Vision Pro to accelerate development of smart glasses. These could shake up the field if they launch in the next few years.

Other players like eSight, OrCam, and Apple could shape the future of smart glasses accessibility.

🙋‍♀️ My Takeaway

For me, the ability to preserve what little vision I still have is the deciding factor. That makes Envision’s open design very appealing, even though the cost is steep. Meta’s new Display glasses are exciting for their affordability and style, but I wonder if they’re accessible enough for daily low-vision use.

👉 If you’re curious about mainstream options, read my earlier review of the Ray-Ban Meta AR Smart Glasses.

👉 If you’re exploring purpose-built tools, check out my posts on Magnifier Apps vs Handheld Magnifiers for a look at other everyday accessibility tools.

✨ A Few Words Before You Go

Smart glasses are evolving quickly, and no single device will fit everyone. Meta’s Ray-Ban Display brings mainstream affordability, while Envision delivers specialized accessibility at a premium.

My best advice? Think about your personal priorities:

- Do you want a see-through design that preserves your remaining vision?

- Or do you prefer hearing a narrated world with specialized features?

- Is cost or functionality your bigger concern?

I’d love to hear from you. Have you tried either of these glasses? Which features matter most to you? Share your experiences in the comments — your perspective could help someone else make an important choice.